As an (aero)space nerd who has already experienced weightlessness on board a parabolic flight, I am holding on to one big dream for the future:

I would like to witness, with my own eyes, the curvature of the Earth as seen from space.

Sadly, I am aware that this goal cannot be reached without massive funding, or without an outstanding individual such as Richard Branson or Elon Musk offering me a ride.

Therefore, I am left with a poor man’s option, challenging nonetheless: launching a balloon into the upper stratosphere, at approximately 35 km altitude, and replacing my own eyes with panoramic digital imagery. This goal can be reached with creative mechanical and digital engineering, some limited paperwork for the air traffic control authorities (I am already a pilot, so even excessive paperwork cannot scare me) and a budget roughly 2000 times smaller than the price of a ticket on Sir Richard’s Virgin Galactic.

Granted, I’ll get less bang for the buck, but I reckon it will be worth the effort!

This has actually been my entry project into IoT/µC-based digital electronics, and of course I discovered that, to do it cleanly, I needed to research and overcome some fundamental hurdles, which led me to pursue other projects first.

Here are the main requirements and challenges I have encountered:

- reliable, low-power flight control unit

- continuous reception of 3D GPS position data and derivatives

- monitoring of inside/outside air temperature in the range [-60 °C, +30 °C]

- long-range (up to 40 km) telemetry at reasonable data rates to transmit position and status

- panoramic color imagery with onboard storage of image data, possibly even a limited downlink capability

- trajectory evaluation for landing site prediction

- asynchronous, periodic, fail-tolerant execution of onboard tasks

So far, these have been addressed as follows:

- While some people choose a Raspberry Pi as an easily maintainable Unix platform for flight data management, the Pi’s power consumption and its system overhead for interrupt handling and bare-metal use of digital interfaces led me to choose the Arduino-compatible Teensy 3.6 (32-bit ARM Cortex-M4 at 180 MHz) as the core of the flight hardware.

- There is a range of lightweight GPS devices available; I chose the u-blox NEO-6M, connected to the Teensy via a serial interface. The receiver’s mode can be adjusted during operation, switching from the ground-based ranges of position and velocity to “space”-mode operation.

- Two Dallas DS18B20 digital temperature sensors cover most of my range requirement (the datasheet specifies -55 °C to +125 °C) and are attached to the Teensy via 1-Wire serial interfaces with parasite (“phantom”) power.

- Many people have traditionally operated long-range telemetry with 434 MHz frequency-shift keying (FSK) modulated RTTY at 50 to 300 baud and 10 mW output. Apart from the transmitter being sensitive to temperature-induced drift of the carrier frequency, the receiving side is an adventurous undertaking, using an SDR (software-defined radio) and RTTY decoding software, much like radio amateurs decoding messages from the first generation of satellites. I got it working, but it left me with a fragile impression, which led me to switch to LoRa digital radio modules. These are highly reliable, inexpensive, lightweight transceivers that use chirp spread-spectrum modulation with forward error correction and selectable bandwidth, spreading factor and packet length. Eventually I chose HopeRF 868 MHz SX1276 modules on both ends, a quarter-wave (λ/4) ground-plane antenna on the payload and a custom-built Yagi antenna (many thanks to Oleksandr from Ukraine!) connected to the ground receiver. This is hassle-free, solid hardware, and initial tests inside a building and over a few hundred metres (with obstacles) were successful. A line-of-sight long-range test has yet to be performed (most likely between Kalmit, 49.321N 8.083E, and Oberflockenbach/Weinheim, 49.502N 8.723E, roughly 50 km apart).

- Imagery will use two back-to-back mounted 2 MP Arducam OV2640 cameras, interfacing with the Teensy via I²C (sensor configuration) and SPI (image data). I plan to rotate the camera assembly with a servo, stepping rapidly through 3 to 5 positions within a 180° arc. Image acquisition takes approximately 80 to 100 ms per position, with buffered storage to the Teensy’s SD card (8 GB). Unlike others, I am reluctant to fly expensive and heavy GoPro hardware, so I do without video recording. Moreover, on an amateur budget, long-range image or video transmission at acceptable bandwidth is unfortunately not feasible.

- GPS data is fed into a 120 s buffer at 2 s intervals (60 samples), and a 3D linear regression is computed using an eigenvector/matrix-decomposition library that runs flawlessly on the Teensy. Once the trajectory vector is known, the landing site is calculated from its intersection with the terrestrial plane, and the prediction is included in the telemetry during the payload’s descent (after the helium balloon bursts).

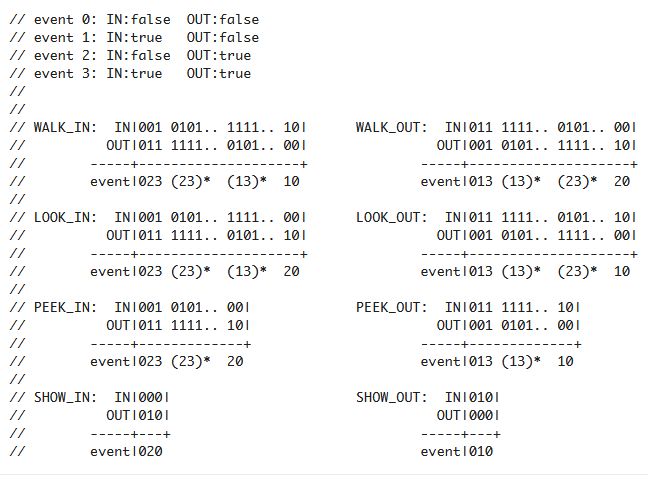
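The “space” mode switch on the NEO-6M presumably corresponds to u-blox’s dynamic platform model, which is set with the UBX-CFG-NAV5 message; the u-blox 6 receiver description lists the default “portable” model as limited to 12 km altitude and “airborne <1g” as allowing up to 50 km. The C++ sketch below builds that message with only the dynamic-model bit set in the mask, so all other navigation settings stay untouched. Field offsets and model numbers are taken from the u-blox 6 protocol documentation and are worth double-checking there; the function names are hypothetical. On the Teensy the bytes would simply be written to the GPS serial port (e.g. `Serial1.write(frame.data(), frame.size())`), and the receiver should answer with UBX-ACK-ACK (class 0x05, id 0x01).

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Dynamic platform models (subset), numbered as in the u-blox 6 protocol docs.
enum class DynModel : std::uint8_t { Portable = 0, Airborne1g = 6 };

constexpr std::size_t kNav5PayloadLen = 36;
constexpr std::size_t kNav5FrameLen = 2 + 4 + kNav5PayloadLen + 2;  // sync, header, payload, checksum

// 8-bit Fletcher checksum used by UBX, computed over class, id, length and payload.
void ubxChecksum(const std::uint8_t* data, std::size_t len,
                 std::uint8_t& ckA, std::uint8_t& ckB) {
  assert(data != nullptr || len == 0);
  ckA = 0;
  ckB = 0;
  for (std::size_t i = 0; i < len; ++i) {
    ckA = static_cast<std::uint8_t>(ckA + data[i]);
    ckB = static_cast<std::uint8_t>(ckB + ckA);
  }
}

// Builds a UBX-CFG-NAV5 frame that changes only the dynamic model.
std::array<std::uint8_t, kNav5FrameLen> buildNav5(DynModel model) {
  std::array<std::uint8_t, kNav5FrameLen> f{};  // unused payload fields stay zero
  f[0] = 0xB5;  // sync char 1
  f[1] = 0x62;  // sync char 2
  f[2] = 0x06;  // class CFG
  f[3] = 0x24;  // id NAV5
  f[4] = static_cast<std::uint8_t>(kNav5PayloadLen & 0xFF);  // length, little-endian
  f[5] = static_cast<std::uint8_t>(kNav5PayloadLen >> 8);
  f[6] = 0x01;  // mask low byte: bit 0 = apply dynModel only
  f[7] = 0x00;  // mask high byte
  f[8] = static_cast<std::uint8_t>(model);
  ubxChecksum(&f[2], 4 + kNav5PayloadLen, f[kNav5FrameLen - 2], f[kNav5FrameLen - 1]);
  return f;
}

// Verifies sync chars, declared length and checksum of a UBX frame.
bool ubxFrameValid(const std::uint8_t* f, std::size_t len) {
  if (len < 8 || f[0] != 0xB5 || f[1] != 0x62) return false;
  const std::size_t payload = static_cast<std::size_t>(f[4] | (f[5] << 8));
  if (len != payload + 8) return false;
  std::uint8_t a = 0, b = 0;
  ubxChecksum(f + 2, 4 + payload, a, b);
  return a == f[len - 2] && b == f[len - 1];
}
```

With the mask and model set this way, the frame is 44 bytes long and ends in the checksum bytes 0x55 0xB4. The same validator can be reused on incoming UBX replies.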
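For the DS18B20 sensors, each reading arrives as a 9-byte scratchpad: bytes 0 and 1 hold the temperature as a 16-bit two’s-complement value (1/16 °C per LSB at the default 12-bit resolution), and byte 8 is a Dallas/Maxim CRC-8 over bytes 0 to 7. The C++ sketch below shows only the decoding step, with hypothetical function names; the 1-Wire bus transactions themselves (for instance through a OneWire library on the Teensy) are left out. It also flags readings outside the datasheet range, which matters because the -60 °C end of the requirement lies below the specified -55 °C.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>

// Dallas/Maxim 1-Wire CRC-8 (polynomial x^8 + x^5 + x^4 + 1), processed LSB first.
std::uint8_t crc8Maxim(const std::uint8_t* data, std::size_t len) {
  assert(data != nullptr || len == 0);
  std::uint8_t crc = 0;
  for (std::size_t i = 0; i < len; ++i) {
    std::uint8_t byte = data[i];
    for (int bit = 0; bit < 8; ++bit) {
      const bool mix = ((crc ^ byte) & 0x01) != 0;
      crc = static_cast<std::uint8_t>(crc >> 1);
      if (mix) crc ^= 0x8C;  // 0x31 bit-reversed
      byte = static_cast<std::uint8_t>(byte >> 1);
    }
  }
  return crc;
}

// Converts the scratchpad temperature bytes (LSB first) to degrees Celsius.
// Assumes 12-bit resolution: two's complement, 1/16 °C per LSB.
double ds18b20RawToCelsius(std::uint8_t lsb, std::uint8_t msb) {
  const std::int32_t u = (static_cast<std::int32_t>(msb) << 8) | lsb;
  const std::int32_t raw = (u & 0x8000) ? u - 0x10000 : u;  // sign-extend 16 bits
  return raw / 16.0;
}

// True if the reading lies inside the datasheet's specified range.
bool withinDatasheetRange(double celsius) {
  return celsius >= -55.0 && celsius <= 125.0;
}

// Checks the CRC of a 9-byte scratchpad (byte 8 = CRC of bytes 0..7) and
// returns the temperature, or std::nullopt on a corrupted read.
std::optional<double> decodeScratchpad(const std::uint8_t (&pad)[9]) {
  if (crc8Maxim(pad, 8) != pad[8]) return std::nullopt;
  return ds18b20RawToCelsius(pad[0], pad[1]);
}
```

As a sanity check against the datasheet examples, the raw value 0xFC90 decodes to -55 °C and 0x0191 to +25.0625 °C. The CRC check is cheap insurance on long, parasite-powered wires, where a corrupted read would otherwise pass as an ordinary temperature.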
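The trade-off between range and data rate on the LoRa link is governed mostly by spreading factor and bandwidth. The Semtech SX1276 datasheet gives a formula for a packet’s time on air; the C++ sketch below transcribes it, using hypothetical parameter names and enabling low-data-rate optimisation whenever a symbol lasts longer than 16 ms, as the datasheet recommends.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Radio settings that determine airtime (names are illustrative).
struct LoRaParams {
  int sf;               // spreading factor, 6..12
  double bwHz;          // bandwidth in Hz, e.g. 125000
  int cr;               // coding rate index 1..4, meaning 4/5..4/8
  int preambleSymbols;  // programmed preamble length
  bool explicitHeader;  // false = implicit header mode
  bool crcOn;           // payload CRC enabled
};

// Time on air in milliseconds, following the SX1276 datasheet formula.
double loraTimeOnAirMs(const LoRaParams& p, int payloadBytes) {
  assert(p.sf >= 6 && p.sf <= 12 && p.cr >= 1 && p.cr <= 4 && payloadBytes >= 0);
  const double tSym = std::pow(2.0, p.sf) / p.bwHz * 1000.0;  // symbol time, ms
  const int de = tSym > 16.0 ? 1 : 0;  // low-data-rate optimisation
  const int ih = p.explicitHeader ? 0 : 1;
  const double tPreamble = (p.preambleSymbols + 4.25) * tSym;
  const double num = 8.0 * payloadBytes - 4.0 * p.sf + 28.0
                     + 16.0 * (p.crcOn ? 1 : 0) - 20.0 * ih;
  const double den = 4.0 * (p.sf - 2 * de);
  const double payloadSymbols =
      8.0 + std::max(std::ceil(num / den) * (p.cr + 4), 0.0);
  return tPreamble + payloadSymbols * tSym;
}
```

For a 10-byte payload with an 8-symbol preamble, explicit header, CRC and coding rate 4/5 at 125 kHz, this gives about 41 ms at SF7 and about 991 ms at SF12: every step towards more range costs airtime, and in the 868 MHz band also duty-cycle budget.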
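With two cameras mounted back to back, turning the assembly through half a circle is enough for a full panorama: n servo stops yield 2n headings spaced 180/n degrees apart, so each camera needs a horizontal field of view of at least 180/n degrees for a gap-free ring. The C++ sketch below plans those stops. The linear 1000 to 2000 µs pulse mapping for 0° to 180° is a common hobby-servo convention and only an assumption here, since real servos differ; the names are hypothetical.

```cpp
#include <cassert>
#include <vector>

struct CameraStop {
  double servoDeg;      // servo angle for this stop
  double frontHeading;  // heading seen by the front camera (deg, relative to payload)
  double rearHeading;   // heading seen by the rear camera, always +180 deg
  int pulseUs;          // servo pulse width (assumed linear mapping)
};

// Spreads n stops evenly over a half-turn [0, 180). With back-to-back cameras
// this yields 2n headings spaced 180/n degrees apart around the full circle.
std::vector<CameraStop> planStops(int n, int pulseAt0Us = 1000, int pulseAt180Us = 2000) {
  assert(n >= 1 && pulseAt180Us > pulseAt0Us);
  std::vector<CameraStop> stops;
  const double step = 180.0 / n;
  for (int i = 0; i < n; ++i) {
    const double angle = i * step;
    const double pulse = pulseAt0Us + (pulseAt180Us - pulseAt0Us) * angle / 180.0;
    stops.push_back({angle, angle, angle + 180.0, static_cast<int>(pulse + 0.5)});
  }
  return stops;
}

// Minimum horizontal field of view per camera for a gap-free panorama.
double requiredFovDeg(int n) {
  assert(n >= 1);
  return 180.0 / n;
}
```

With n = 3 the stops are 0°, 60° and 120°, the rear camera covering 180°, 240° and 300°. On the Teensy, the pulse widths could be passed to the Arduino Servo library’s `writeMicroseconds()`.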
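The landing prediction can be illustrated with a total-least-squares line fit: the best-fit 3D line passes through the centroid of the buffered fixes, along the dominant eigenvector of their covariance matrix. The C++ sketch below finds that eigenvector by power iteration, standing in for the eigen-decomposition library used on the Teensy, and intersects the line with a flat ground plane. It assumes the fixes have already been converted to local metric coordinates (x east, y north, z altitude, in metres), for example with an equirectangular approximation around the launch site; all names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <optional>
#include <vector>

using Vec3 = std::array<double, 3>;  // local frame: x east, y north, z up (m)

struct Line3 {
  Vec3 point;  // centroid of the samples
  Vec3 dir;    // unit direction of the best-fit line
};

// Total-least-squares line fit: centroid plus dominant eigenvector of the
// scatter matrix, found by power iteration.
std::optional<Line3> fitLine(const std::vector<Vec3>& pts, int iterations = 100) {
  assert(pts.size() >= 2 && iterations > 0);
  Vec3 c{0.0, 0.0, 0.0};
  for (const Vec3& p : pts)
    for (int k = 0; k < 3; ++k) c[k] += p[k];
  for (int k = 0; k < 3; ++k) c[k] /= static_cast<double>(pts.size());

  double cov[3][3] = {};  // scatter matrix; scale does not affect eigenvectors
  for (const Vec3& p : pts)
    for (int i = 0; i < 3; ++i)
      for (int j = 0; j < 3; ++j) cov[i][j] += (p[i] - c[i]) * (p[j] - c[j]);

  Vec3 v{1.0, 1.0, 1.0};  // power-iteration start vector
  for (int it = 0; it < iterations; ++it) {
    Vec3 w{0.0, 0.0, 0.0};
    for (int i = 0; i < 3; ++i)
      for (int j = 0; j < 3; ++j) w[i] += cov[i][j] * v[j];
    const double norm = std::sqrt(w[0] * w[0] + w[1] * w[1] + w[2] * w[2]);
    if (norm == 0.0) return std::nullopt;  // no spread (or start vector orthogonal to it)
    for (int k = 0; k < 3; ++k) v[k] = w[k] / norm;
  }
  return Line3{c, v};
}

// Intersects the fitted line with the horizontal plane z = groundAltitude.
std::optional<Vec3> landingPoint(const Line3& line, double groundAltitude) {
  if (std::fabs(line.dir[2]) < 1e-6) return std::nullopt;  // (almost) horizontal
  const double s = (groundAltitude - line.point[2]) / line.dir[2];
  return Vec3{line.point[0] + s * line.dir[0], line.point[1] + s * line.dir[1],
              groundAltitude};
}
```

With 60 samples (120 s at 2 s intervals), the scatter matrix stays a fixed 3×3 and each iteration is a handful of multiplications, which suits a microcontroller. The straight-line extrapolation assumes the descent direction of the last 120 s holds all the way down; winds that change with altitude will shift the real landing site.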

- One of the software components already in place is a custom library called myTaskScheduler. It allows independent services to be registered and triggered at recurring absolute (NTP- or GPS-time-based) and relative time intervals. It can also automatically process (mean, standard deviation, min, max) the time-series data collected by the registered services. Moreover, myTaskScheduler performs health checks on services and, if necessary, responds to failures in a defined and reliable manner, without sending the whole system into deadlock.
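myTaskScheduler itself is not published here, so the following C++ sketch is only a hypothetical illustration of the ideas described, and none of its names reflect the real library’s API. Services are registered with an interval (relative, or aligned to multiples of the interval on a clock such as GPS time), their samples feed running statistics via Welford’s algorithm, and a simple health policy disables a service after a number of consecutive failures so that it cannot stall the others.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <limits>
#include <string>
#include <utility>
#include <vector>

// Running mean/sd/min/max (Welford's algorithm) without storing samples.
struct RunningStats {
  std::uint32_t n = 0;
  double mean = 0.0, m2 = 0.0;
  double min = std::numeric_limits<double>::infinity();
  double max = -std::numeric_limits<double>::infinity();
  void add(double x) {
    ++n;
    const double d = x - mean;
    mean += d / n;
    m2 += d * (x - mean);
    if (x < min) min = x;
    if (x > max) max = x;
  }
  double sd() const { return n > 1 ? std::sqrt(m2 / (n - 1)) : 0.0; }
};

// A service returns true on success and may report one sample value.
using Service = std::function<bool(double& sample)>;

struct Task {
  std::string name;
  std::uint32_t intervalMs;
  std::uint32_t nextDueMs;
  Service run;
  RunningStats stats;
  int consecutiveFailures = 0;
  bool enabled = true;
};

class TaskScheduler {
 public:
  explicit TaskScheduler(int maxFailures) : maxFailures_(maxFailures) {}

  void add(std::string name, std::uint32_t intervalMs, Service s,
           std::uint32_t nowMs = 0, bool alignToClock = false) {
    assert(intervalMs > 0);
    // Aligned tasks fire on multiples of the interval (e.g. every full GPS minute).
    const std::uint32_t due =
        alignToClock ? ((nowMs + intervalMs - 1) / intervalMs) * intervalMs : nowMs;
    tasks_.push_back(Task{std::move(name), intervalMs, due, std::move(s)});
  }

  // Call from the main loop; runs each due, enabled task once per call.
  void tick(std::uint32_t nowMs) {
    for (Task& t : tasks_) {
      // Signed difference keeps working across 32-bit counter wrap-around.
      if (!t.enabled || static_cast<std::int32_t>(nowMs - t.nextDueMs) < 0) continue;
      t.nextDueMs += t.intervalMs;  // fixed-rate schedule: no cumulative drift
      double sample = 0.0;
      if (t.run(sample)) {
        t.stats.add(sample);
        t.consecutiveFailures = 0;
      } else if (++t.consecutiveFailures >= maxFailures_) {
        t.enabled = false;  // isolate the failing service instead of blocking
      }
    }
  }

  const Task& task(std::size_t i) const { return tasks_.at(i); }

 private:
  int maxFailures_;
  std::vector<Task> tasks_;
};
```

Calling `tick()` from the main loop with the current time (e.g. `millis()`) keeps the design cooperative and interrupt-free; a real health policy might restart or reinitialise a service before giving up on it.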

Next steps (with a long way still to go…):

- integrate LoRa and imagery control software with myTaskScheduler

- wrap myTaskScheduler in conditional “flight plan” logic that determines the activities during launch, ascent, descent and landing

- develop software for the hand-held receiver (recovery) unit (LoRa + ESP8266), including telemetry aggregation and data display on a Grafana dashboard

- consolidate the flight hardware on a custom-made PCB (the current wired setup is not reliable enough for field testing)
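The planned “flight plan” logic could take the form of a small state machine driven by the vertical speed derived from successive GPS fixes; each phase would then enable or disable groups of scheduler services (for instance, the landing-site prediction only during descent). The C++ sketch below is hypothetical throughout: the thresholds are placeholders rather than tested values, and the confirmation count exists so that a single noisy fix cannot trigger a phase change.

```cpp
#include <cassert>

enum class Phase { Ground, Ascent, Descent, Landed };

// Hypothetical thresholds, to be tuned against real test data.
struct PhaseThresholds {
  double launchClimb = 2.0;  // m/s: sustained climb that counts as launch
  double burstSink = -5.0;   // m/s: sink rate taken as balloon burst
  double landedBand = 0.5;   // m/s: |vertical speed| below this counts as stationary
  int confirmSamples = 5;    // consecutive samples required for a transition
};

class FlightPlan {
 public:
  explicit FlightPlan(PhaseThresholds t = PhaseThresholds{}) : t_(t) {
    assert(t_.confirmSamples > 0);
  }

  Phase phase() const { return phase_; }

  // Feed one vertical-speed sample (m/s, positive = up).
  Phase update(double verticalSpeed) {
    count_ = exitCondition(verticalSpeed) ? count_ + 1 : 0;
    if (count_ >= t_.confirmSamples) {
      phase_ = next(phase_);
      count_ = 0;
    }
    return phase_;
  }

 private:
  // Condition that, if it persists, ends the current phase.
  bool exitCondition(double vz) const {
    switch (phase_) {
      case Phase::Ground:  return vz > t_.launchClimb;
      case Phase::Ascent:  return vz < t_.burstSink;
      case Phase::Descent: return vz > -t_.landedBand && vz < t_.landedBand;
      case Phase::Landed:  return false;
    }
    return false;
  }

  static Phase next(Phase p) {
    switch (p) {
      case Phase::Ground:  return Phase::Ascent;
      case Phase::Ascent:  return Phase::Descent;
      case Phase::Descent: return Phase::Landed;
      case Phase::Landed:  return Phase::Landed;
    }
    return p;
  }

  PhaseThresholds t_;
  Phase phase_ = Phase::Ground;
  int count_ = 0;
};
```

Keeping the transitions one-way (ground, ascent, descent, landed) avoids oscillating between phases near apogee, at the cost of needing a manual reset if a false launch is ever detected on the ground.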